ENG | Creative Coding: A Slime Mold Simulation Journey

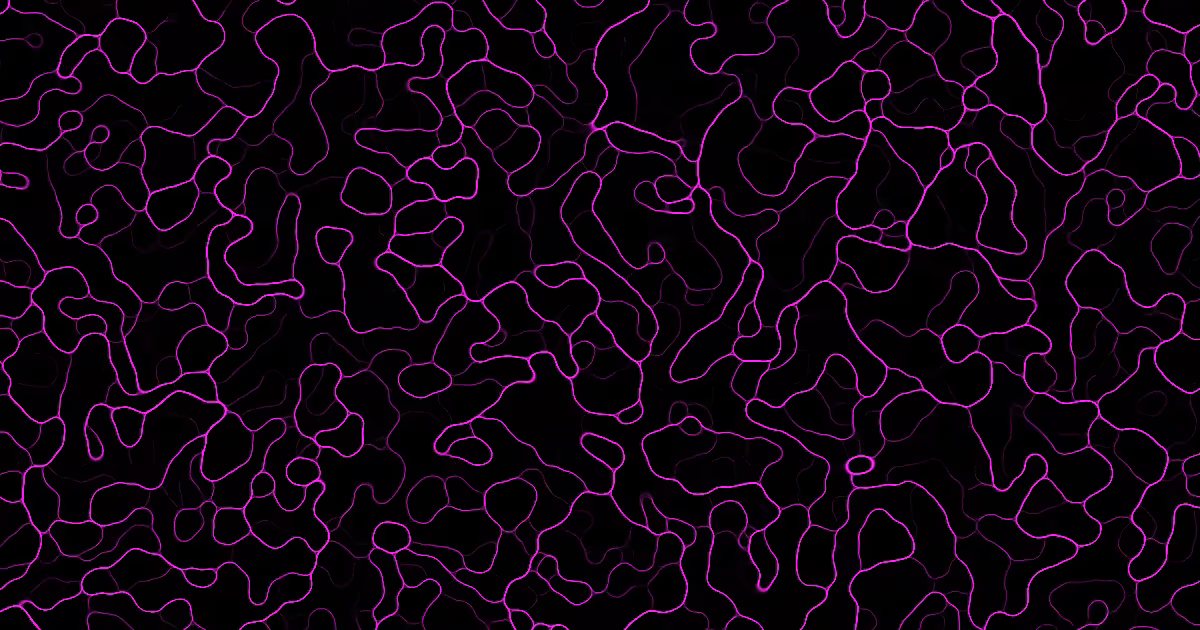

Agent-based simulation where particles leave and follow trails on an evaporating field, forming organic branching patterns. With live demo.

Today I wanted to create some abstract art and noticed that it remotely resembles a reaction-diffusion pattern, which I implemented in C++ a few years ago (it certainly deserves an article about optimizations) and which is on my GitHub. So, after some discussion about what I wanted, I specified that I wanted something like a slime mold simulation. Slime mold (Physarum polycephalum) can be seen, for example, in this YouTube video, and similar coding experiments with awesome results are here or here.

ChatGPT generated code that required only minor changes, such as the resolution and the number of frames.

Description

The program simulates a group of agents moving in a 2D field. Each agent senses pheromone levels ahead, slightly left, and slightly right, then adjusts its direction to follow stronger signals. As it moves, it deposits pheromone into the field. The field evaporates slowly, so trails fade over time. This feedback loop causes trails to strengthen where many agents travel and disappear elsewhere, producing branching patterns that can merge, split, or fade depending on agent movement.

Code (initial one)

The following code is a simple agent-based model. Basically all the logic is in updateAgents(): for each agent, it senses the field value ahead, to the left, and to the right, adjusts the direction accordingly, moves, and increases the field value at the new position.

    // slime_mold.cpp
    #include <algorithm> // std::min
    #include <vector>
    #include <cmath>     // M_PI is a POSIX extension; MSVC needs _USE_MATH_DEFINES
    #include <cstdio>
    #include <cstdlib>
    #include <cstring>
    #include <ctime>
    #include <cstdint>   // types like uint8_t
    #include <iostream>

    constexpr int WIDTH = 1280;
    constexpr int HEIGHT = 720;
    constexpr int NUM_AGENTS = 120000;
    constexpr float SENSOR_ANGLE = 0.5f; // radians between center and side sensors
    constexpr float SENSOR_DIST = 5.0f;  // pixels from agent to sensors
    constexpr float TURN_ANGLE = 0.3f;   // radians turned per step
    constexpr float STEP_SIZE = 1.0f;    // pixels moved per step
    constexpr float EVAPORATE = 0.85f;   // field multiplier per frame
    constexpr int FRAMES = 5000;

    struct Agent {
        float x, y, angle;
    };

    std::vector<Agent> agents(NUM_AGENTS);
    std::vector<float> field(WIDTH * HEIGHT, 0.0f);

    inline int idx(int x, int y) {
        return (y % HEIGHT) * WIDTH + (x % WIDTH);
    }

    float sampleField(float x, float y) {
        int xi = ((int)std::round(x) + WIDTH) % WIDTH;
        int yi = ((int)std::round(y) + HEIGHT) % HEIGHT;
        return field[idx(xi, yi)];
    }

    void deposit(Agent &a) {
        int xi = ((int)std::round(a.x) + WIDTH) % WIDTH;
        int yi = ((int)std::round(a.y) + HEIGHT) % HEIGHT;
        field[idx(xi, yi)] += 1.0f;
    }

    void updateAgents() {
        for (auto &a : agents) {
            // Sample sensors
            float sx = std::cos(a.angle);
            float sy = std::sin(a.angle);
            float c = sampleField(a.x + sx * SENSOR_DIST, a.y + sy * SENSOR_DIST);
            float l = sampleField(a.x + std::cos(a.angle - SENSOR_ANGLE) * SENSOR_DIST,
                                  a.y + std::sin(a.angle - SENSOR_ANGLE) * SENSOR_DIST);
            float r = sampleField(a.x + std::cos(a.angle + SENSOR_ANGLE) * SENSOR_DIST,
                                  a.y + std::sin(a.angle + SENSOR_ANGLE) * SENSOR_DIST);

            // Adjust angle
            if (c > l && c > r) {
                // Straight
            } else if (l > r) {
                a.angle -= TURN_ANGLE;
            } else if (r > l) {
                a.angle += TURN_ANGLE;
            } else {
                a.angle += (rand() % 2 ? 1 : -1) * TURN_ANGLE;
            }

            // Move
            a.x += std::cos(a.angle) * STEP_SIZE;
            a.y += std::sin(a.angle) * STEP_SIZE;

            // Wrap around
            if (a.x < 0) a.x += WIDTH;
            if (a.x >= WIDTH) a.x -= WIDTH;
            if (a.y < 0) a.y += HEIGHT;
            if (a.y >= HEIGHT) a.y -= HEIGHT;

            deposit(a);
        }
    }

    void diffuse() {
        // Evaporation only for simplicity
        for (auto &v : field) v *= EVAPORATE;
    }

    void renderToPixels(std::vector<uint8_t> &pixels) {
        // TGA stores pixels in BGR order
        for (int i = 0; i < WIDTH * HEIGHT; i++) {
            uint8_t c = (uint8_t)std::min(field[i] * 10.0f, 255.0f);
            pixels[i * 3 + 0] = c; // Blue
            pixels[i * 3 + 1] = 0; // Green
            pixels[i * 3 + 2] = c; // Red
        }
    }

    void saveTGA(const char *filename, const std::vector<uint8_t> &pixels) {
        FILE *f = fopen(filename, "wb");
        if (!f) return;
        uint8_t header[18] = {};
        header[2] = 2; // uncompressed true-color
        header[12] = WIDTH & 0xFF;
        header[13] = (WIDTH >> 8) & 0xFF;
        header[14] = HEIGHT & 0xFF;
        header[15] = (HEIGHT >> 8) & 0xFF;
        header[16] = 24; // bits per pixel
        fwrite(header, 1, 18, f);
        fwrite(pixels.data(), 1, pixels.size(), f);
        fclose(f);
    }

    int main() {
        srand((unsigned)time(0));

        // Random start position and heading for every agent
        for (auto &a : agents) {
            a.x = rand() % WIDTH;
            a.y = rand() % HEIGHT;
            a.angle = (rand() / (float)RAND_MAX) * 2.0f * M_PI;
        }

        std::vector<uint8_t> pixels(WIDTH * HEIGHT * 3, 0);

        for (int frame = 0; frame < FRAMES; frame++) {
            updateAgents();
            diffuse();
            renderToPixels(pixels);

            // Save every 10 frames
            if (frame % 10 == 0) {
                char filename[64];
                sprintf(filename, "frame_%04d.tga", frame);
                std::cout << "Saving " << filename << std::endl;
                saveTGA(filename, pixels);
            }
        }
        return 0;
    }

Useful commands

    # Compile (maybe add -O3 -march=native)
    # g++ slime_mold.cpp -o slime_mold `sdl2-config --cflags --libs`
    g++ slime_mold.cpp -o slime_mold

    # Run
    ./slime_mold

    # Preview frames (many image viewers are too modern to open an image format from the 80s)
    feh frame_????.tga

    # Encode video
    ffmpeg -framerate 30 -pattern_type glob -i "frame_*.tga" -c:v libsvtav1 -an slime_mold.webm

    # Encode video on Windows
    Get-ChildItem -Name "frame_*.tga" | ForEach-Object { "file '$_'" } | Out-File -Encoding ascii frames.txt
    c:\apps\ffmpeg.exe -f concat -safe 0 -i frames.txt -framerate 30 -c:v libsvtav1 -pix_fmt yuv420p -an slime_mold.webm

Optional improvement and optimizations

It’s clearly not the best piece of code: updateAgents() wraps the agent’s x, y coordinates, then deposit() does it again, and idx() does it once more, this time in an inconsistent way that does not handle negative inputs, but … it’s AI-generated code.
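As a rough illustration, the three wrap-arounds could be collapsed into a single helper that also copes with negative coordinates. This is only a sketch: the names wrap() and cellIndex() are hypothetical and do not come from the original code, and only the field size is taken from the listing above.

```cpp
#include <cassert>
#include <cmath>

constexpr int WIDTH = 1280;
constexpr int HEIGHT = 720;

// Hypothetical helper: map any integer coordinate into [0, size).
// Plain % keeps the sign of the dividend in C++, so -1 % 1280 is -1.
inline int wrap(int v, int size) {
    assert(size > 0);
    const int m = v % size;
    return m < 0 ? m + size : m;
}

// Hypothetical replacement for idx(): round once, wrap once.
inline int cellIndex(float x, float y) {
    const int xi = wrap(static_cast<int>(std::lround(x)), WIDTH);
    const int yi = wrap(static_cast<int>(std::lround(y)), HEIGHT);
    return yi * WIDTH + xi;
}
```

With this, cellIndex(-0.6f, 0.0f) lands in the last column of row 0 instead of producing a negative index. In the original listing the problem stays latent, because sampleField() and deposit() add WIDTH and HEIGHT before calling idx().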

Another interesting fact is that saveTGA() is basically good old plain C, whereas the other functions use std::cos() instead of cos().

The algorithm has quite poor performance. Each agent reads three points (left, forward, right), moves, and writes to one point. This means essentially random memory access and a lot of cache misses, especially for large fields. The agents are not sorted in any way.

However, Intel VTune Profiler reveals a few bottlenecks that could be fixed:

- std::round is expensive. This can be fixed instantly by rewriting every (int)std::round(a.x) as (int)(a.x+0.5f). Note that the cast truncates toward zero, so the two differ for negative inputs.

- Then there are the expensive trigonometric functions, which can be replaced by storing a dx,dy vector rather than an angle and by changing direction using matrix multiplication.
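A minimal sketch of the second idea, assuming the heading is stored as a unit vector and the turn is a fixed 2x2 rotation. AgentV, TURN_COS, TURN_SIN, turn() and step() are hypothetical names for illustration, not the project's actual code.

```cpp
#include <cassert>
#include <cmath>

constexpr float TURN_ANGLE = 0.3f;

// Hypothetical agent layout: the heading is a unit vector instead of an angle.
struct AgentV {
    float x, y;    // position
    float dx, dy;  // heading, kept close to unit length
};

// Rotation coefficients, computed once instead of per agent per frame.
const float TURN_COS = std::cos(TURN_ANGLE);
const float TURN_SIN = std::sin(TURN_ANGLE);

// Rotate the heading by +TURN_ANGLE (sign = +1) or -TURN_ANGLE (sign = -1)
// using the rotation matrix [c -s; s c].
inline void turn(AgentV &a, float sign) {
    assert(sign == 1.0f || sign == -1.0f);
    const float s = sign * TURN_SIN;
    const float ndx = a.dx * TURN_COS - a.dy * s;
    const float ndy = a.dx * s + a.dy * TURN_COS;
    a.dx = ndx;
    a.dy = ndy;
}

// Move along the heading; no trigonometry is needed here.
inline void step(AgentV &a, float stepSize) {
    a.x += a.dx * stepSize;
    a.y += a.dy * stepSize;
}
```

The sensor offsets could be obtained the same way, by rotating the heading with the precomputed sine and cosine of SENSOR_ANGLE. Repeated rotations accumulate rounding error, so the heading may need an occasional renormalization.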

Video

(Not so) final words

Feel free to experiment with NUM_AGENTS, EVAPORATE and so on. With a small field such as 640x480, the program runs reasonably fast.
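To get a feeling for what EVAPORATE does before changing it, the two small helpers below estimate how fast a trail fades and how bright a steadily visited cell gets. They are a sketch derived from the update order in main() (deposit 1.0 per agent, then multiply the field by EVAPORATE); the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

constexpr float EVAPORATE = 0.85f;

// Frames until an untouched trail decays to `fraction` of its value:
// v * e^n = fraction * v  =>  n = ln(fraction) / ln(e).
inline float framesToFade(float evaporate, float fraction) {
    assert(evaporate > 0.0f && evaporate < 1.0f);
    return std::log(fraction) / std::log(evaporate);
}

// Value a cell settles at when `agentsPerFrame` agents deposit 1.0 each frame
// and the field is then multiplied by `evaporate`:
// v = (v + k) * e  =>  v = k * e / (1 - e).
inline float steadyState(float evaporate, float agentsPerFrame) {
    assert(evaporate > 0.0f && evaporate < 1.0f);
    return agentsPerFrame * evaporate / (1.0f - evaporate);
}
```

With EVAPORATE = 0.85, a trail loses half of its value in roughly 4.3 frames, and a cell visited by one agent per frame settles at about 5.7. renderToPixels() multiplies the field by 10, so that cell shows a brightness of about 57 out of 255, and about five agents per frame are needed to saturate a pixel.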

The article originally ended here, but the code was improved over some evenings in the next two weeks.

The first versions can be found in the project’s GitHub repository as slime_mold_early.tar.gz.

Addendum (2025-08-02)

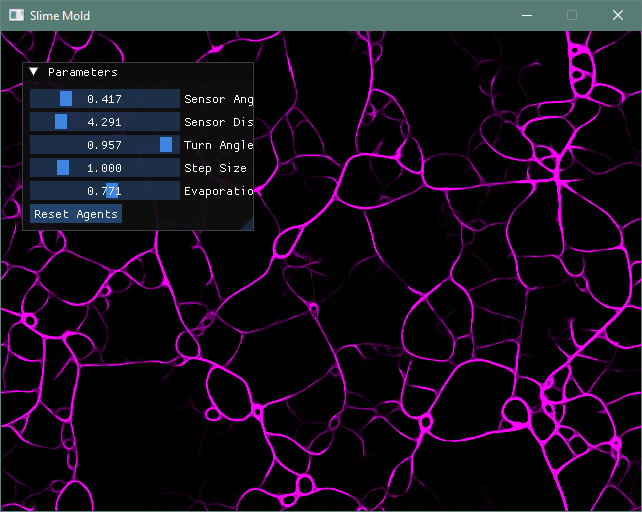

I learned how to use Conan on this example and also added Dear ImGui for changing parameters interactively. The first iteration looks like this.

It’s actually much more fun to watch the animation and play with parameters than to look at the initial static images. Most combinations of parameters are stable; some converge to dots, a single line, or some kind of fog.

Later versions have a side panel rather than a floating one, plus an option to change the color palette and its midpoint, which can reveal more detail. It runs at 100-200 fps on a Ryzen 5900X.

For something created by AI with slight guidance, it’s a pretty interesting and fun program that has only a few lines. ImGui improved it a lot, because the choice of parameters matters and the best ones are maybe at the edge of stability.

Addendum (2025-08-08)

Inspired by my colleague (Lenka Racková) and her Chaotic attractors explorer, I decided to try porting mine to WebAssembly. It took me about six hours in total, mainly due to heavy refactoring of the existing code to separate logic from UI.

This made the code less messy, but the directory structure somewhat complicated. The result is here.

Fun fact: this was originally about 120 lines of AI-generated code saving a series of TGA images. Then I spent about six evenings improving it into something like production-quality code with multiple CMakeLists.txt files and build targets. It’s not perfect, there is no HiDPI scaling, proper error handling, … and I still want to learn QtQuick/QML on something.

Bonus: Live application using WebAssembly

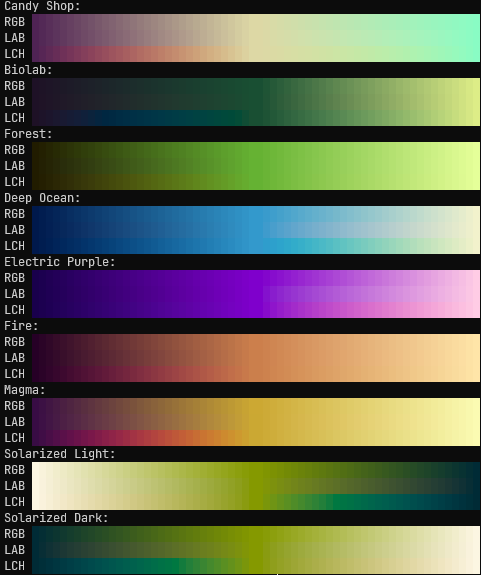

A byproduct of this work is a set of color palettes and interpolations. Only LCH interpolations were left in the GUI, because changing hue gives less boring color maps. This could actually be useful for other projects.
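For readers unfamiliar with the idea, here is a minimal sketch of interpolating between two colors in an LCH-style space. The Lch struct and both function names are hypothetical, and taking the shorter way around the hue circle is an assumption; the project's palettes may be implemented differently.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical color type: lightness, chroma, hue in degrees [0, 360).
struct Lch {
    float L, C, h;
};

// Interpolate hue along the shorter arc of the color wheel.
inline float lerpHue(float h0, float h1, float t) {
    float d = std::fmod(h1 - h0, 360.0f);  // in (-360, 360)
    if (d > 180.0f) d -= 360.0f;
    if (d < -180.0f) d += 360.0f;
    const float h = std::fmod(h0 + t * d, 360.0f);
    return h < 0.0f ? h + 360.0f : h;
}

// Lightness and chroma are interpolated linearly, hue along the arc.
inline Lch lerpLch(const Lch &a, const Lch &b, float t) {
    assert(t >= 0.0f && t <= 1.0f);
    return {a.L + t * (b.L - a.L), a.C + t * (b.C - a.C), lerpHue(a.h, b.h, t)};
}
```

Because the hue moves along the color wheel, a gradient between two saturated colors passes through other saturated hues instead of through gray, as a straight line in RGB often does. Converting the result to displayable RGB is a separate step that this sketch leaves out.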

Brief project history:

- Simple AI generated code, video

- Add SDL2 for live visualization

- Port to Windows, add CMake and Conan

- Upgrade to SDL3 for curiosity

- Add ImGui for changing parameters

- Add to public GitHub

- Change dock to side panel

- Add color palette

- Add presets for color maps and simulation

- Port to WebAssembly

- Exploration of color spaces, conversions and gradients

Further ideas

- Multiple agents following/avoiding others

- Implementation in GLSL shaders

- Once done, upgrade to 3D with volume raycasting

Lessons learned

- Idea of branchless logic using bitmasks (need to explore this further)

- OkLab, OkLch colorspaces and Lch gradient interpolation

- Basics of Dear ImGui and SDL

- Using SDL via Conan on Windows

- Cross-compiling the project to WebAssembly
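As an illustration of the first lesson, the sketch below shows one way the steering decision could be written without if/else, by turning a condition into an all-ones or all-zeros bitmask. It is not taken from the project: selectFloat() and steer() are hypothetical names, and the random tie-break of the original updateAgents() is left out.

```cpp
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t), "sketch assumes 32-bit float");

// Return `a` when cond is true and `b` otherwise, without a branch in the
// source: the condition is expanded to a mask of 0xFFFFFFFF or 0.
inline float selectFloat(bool cond, float a, float b) {
    std::uint32_t ua, ub;
    std::memcpy(&ua, &a, sizeof ua);
    std::memcpy(&ub, &b, sizeof ub);
    const std::uint32_t mask = 0u - static_cast<std::uint32_t>(cond);
    const std::uint32_t ur = (ua & mask) | (ub & ~mask);
    float r;
    std::memcpy(&r, &ur, sizeof r);
    return r;
}

// Hypothetical steering rule mirroring the if/else chain: 0 when the center
// sensor is strongest, -turn toward the left sensor, +turn toward the right.
inline float steer(float c, float l, float r, float turn) {
    const bool straight = (c > l) & (c > r);
    const float side = selectFloat(l > r, -turn, selectFloat(r > l, turn, 0.0f));
    return selectFloat(straight, 0.0f, side);
}
```

An agent would then do a.angle += steer(c, l, r, TURN_ANGLE). Compilers often turn simple conditionals into conditional moves on their own, so whether this is any faster has to be measured.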